- 15 Oct 2024

Hayabusa to BigQuery

Overview

Our BigQuery output lets you send Hayabusa analysis results to a BigQuery table, where you can run SQL queries against the data. This makes it possible to analyze massive datasets at scale. For guidance on using Hayabusa within LimaCharlie, see Hayabusa Extension.

Imagine you want to analyze event logs from tens, hundreds, or thousands of systems using Hayabusa. You have a couple of options:

1. Send the resulting CSV artifact to another platform, such as Timesketch, for further analysis. The CSV generated by Hayabusa in LimaCharlie is compatible with Timesketch.

2. Run queries against all of the data returned by Hayabusa in BigQuery.

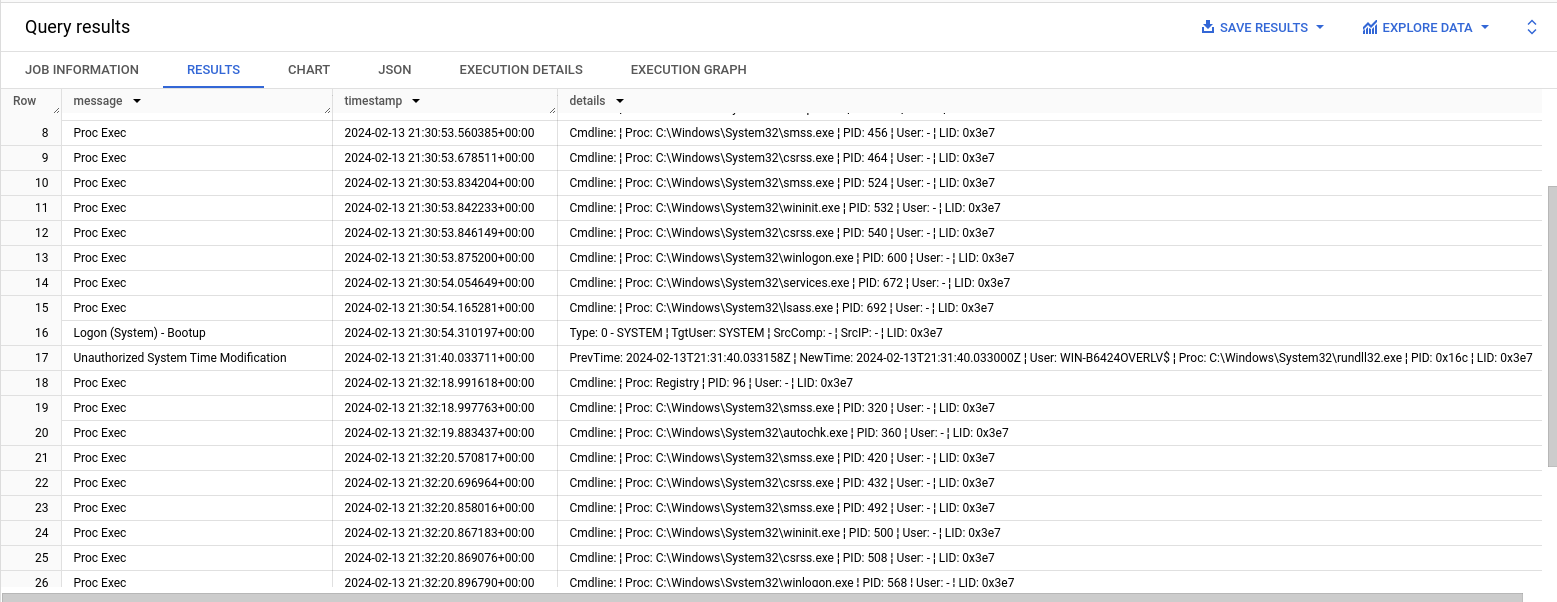

BigQuery dataset containing Hayabusa results:
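To give a feel for the second option, here is a minimal sketch of the kind of aggregate query you might run once the results are in a table. It uses Python's built-in SQLite as a local stand-in for BigQuery, so it is not the BigQuery client API. The sample rows are invented for illustration, and only a subset of the columns is included. In BigQuery, the same `SELECT` would reference your own table as `project.dataset.table`.

```python
import sqlite3

# Hypothetical sample rows (invented for illustration), using a subset of
# the columns from the table schema configured later in this guide.
SAMPLE_ROWS = [
    ("HOST-A", "Suspicious PowerShell", "2024-10-01 12:00:00", "high"),
    ("HOST-A", "Log cleared", "2024-10-01 12:05:00", "high"),
    ("HOST-B", "New service installed", "2024-10-02 08:30:00", "medium"),
]

# Plain SQL aggregation: number of detections per computer and level.
# In BigQuery the FROM clause would name your own project.dataset.table.
QUERY = """
SELECT computer, level, COUNT(*) AS detections
FROM hayabusa
GROUP BY computer, level
ORDER BY detections DESC, computer
"""


def run_demo():
    """Load the sample rows into an in-memory SQLite table and run QUERY."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE hayabusa "
        "(computer TEXT, message TEXT, timestamp TEXT, level TEXT)"
    )
    conn.executemany("INSERT INTO hayabusa VALUES (?, ?, ?, ?)", SAMPLE_ROWS)
    rows = conn.execute(QUERY).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for row in run_demo():
        print(row)
```

Grouping by `computer` and `level` is one way to see which systems produced the most high-severity detections; any other column in your schema can be used the same way.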

Steps to Accomplish

You will need a Google Cloud project

You will need to create a service account within your Google Cloud project

Navigate to your project

Navigate to IAM

Navigate to Service Accounts > Create Service Account

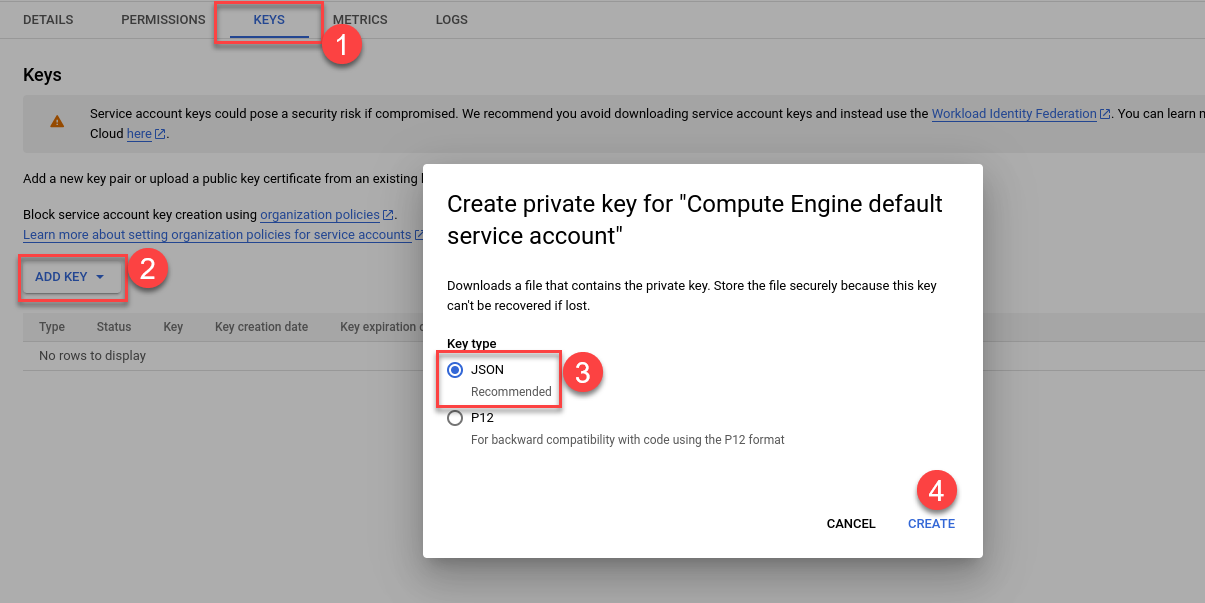

Click on newly created Service Account and create a new key

This will provide you with the JSON format secret key you will later setup in your LimaCharlie output.
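Before pasting the key into LimaCharlie, it can help to confirm that the downloaded file really is a service account key. The sketch below is an optional local sanity check, not part of LimaCharlie or Google Cloud tooling. The function name `check_service_account_key` is hypothetical, and the field list is a subset of what a service account key file normally contains.

```python
import json

# A subset of the fields found in a Google Cloud service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(raw_json):
    """Return a list of problems found in the key text (empty if none)."""
    try:
        key = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(key, dict):
        return ["top-level JSON value is not an object"]

    # Report every required field that is absent or empty.
    problems = [
        f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)
    ]
    # Other credential types (for example user credentials) use a different "type".
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']}")
    return problems


if __name__ == "__main__":
    # Placeholder values only; never commit a real key.
    example = json.dumps({
        "type": "service_account",
        "project_id": "your_project_name",
        "private_key": "PLACEHOLDER",
        "client_email": "placeholder@example.invalid",
    })
    print(check_service_account_key(example))
```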

In BigQuery, create a dataset, table, and schema similar to the following. Keep in mind that the names of your dataset and table are arbitrary, but they must match what you configure in your LimaCharlie output.

Project: your_project_name

Dataset: hayabusa

Table: hayabusa

Schema: computer:STRING, message:STRING, timestamp:STRING, details:STRING, channel:STRING, event_id:STRING, level:STRING, mitre_tactics:STRING, mitre_tags:STRING, extra:STRING

Note that this can be any of the fields from the Hayabusa event that you wish to use. This schema and transform are based on the CSV output using the timesketch-verbose profile.
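BigQuery also accepts a table schema as a JSON array of field objects with `name`, `type`, and `mode` keys. If you prefer that form, the following sketch converts the `name:TYPE` string above into it. The helper name `schema_to_fields` is hypothetical, and treating every column as `NULLABLE` is an assumption rather than something this guide specifies.

```python
import json

# The schema string from this guide, in name:TYPE form.
SCHEMA = (
    "computer:STRING, message:STRING, timestamp:STRING, details:STRING, "
    "channel:STRING, event_id:STRING, level:STRING, mitre_tactics:STRING, "
    "mitre_tags:STRING, extra:STRING"
)


def schema_to_fields(schema):
    """Convert 'name:TYPE, name:TYPE' into a list of BigQuery-style field dicts."""
    fields = []
    for part in schema.split(","):
        name, _, col_type = part.strip().partition(":")
        if not name or not col_type:
            raise ValueError(f"malformed schema entry: {part!r}")
        # Assumption: every column is optional (NULLABLE).
        fields.append({"name": name, "type": col_type, "mode": "NULLABLE"})
    return fields


if __name__ == "__main__":
    print(json.dumps(schema_to_fields(SCHEMA), indent=2))
```

If you change the columns here, make the same change to the schema and transform in the LimaCharlie output so the two stay in sync.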

Now we're ready to create our LimaCharlie Events output.

In the side navigation menu, click "Outputs", then add a new output.

Output stream: Events

Destination: Google Cloud BigQuery

Name: hayabusa-bigquery (you can change this, but it affects a subsequent step, so take note of the output name)

Schema: computer:STRING, message:STRING, timestamp:STRING, details:STRING, channel:STRING, event_id:STRING, level:STRING, mitre_tactics:STRING, mitre_tags:STRING, extra:STRING

Dataset: whatever you named your BQ dataset above

Table: whatever you named your BQ table above

Project: your GCP project name

Secret Key: provide the JSON secret key for your GCP service account

Advanced Options

Custom Transform: paste in this JSON

{
  "channel": "event.results.Channel",
  "computer": "event.results.Computer",
  "message": "event.results.message",
  "timestamp": "event.results.datetime",
  "details": "event.results.Details",
  "event_id": "event.results.EventID",
  "level": "event.results.Level",
  "mitre_tactics": "event.results.MitreTactics",
  "mitre_tags": "event.results.MitreTags",
  "extra": "event.results.ExtraFieldInfo"
}

Specific Event Types: hayabusa_event

Sensor: ext-hayabusa
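To illustrate what the custom transform does, the sketch below resolves each dotted path against a sample event and builds the row that would land in the table. This is only an illustration of the mapping, not LimaCharlie's transform engine, which may handle missing or nested values differently. The sample event and the helper names `resolve_path` and `apply_transform` are hypothetical.

```python
# The custom transform from this guide: BigQuery column -> path in the event.
TRANSFORM = {
    "channel": "event.results.Channel",
    "computer": "event.results.Computer",
    "message": "event.results.message",
    "timestamp": "event.results.datetime",
    "details": "event.results.Details",
    "event_id": "event.results.EventID",
    "level": "event.results.Level",
    "mitre_tactics": "event.results.MitreTactics",
    "mitre_tags": "event.results.MitreTags",
    "extra": "event.results.ExtraFieldInfo",
}

# Hypothetical event, invented for illustration.
SAMPLE_EVENT = {
    "event": {
        "results": {
            "Channel": "Sec",
            "Computer": "HOST-A",
            "message": "Logon (Network)",
            "datetime": "2024-10-01 12:00:00",
            "Details": "User: alice",
            "EventID": "4624",
            "Level": "info",
            "MitreTactics": "",
            "MitreTags": "",
            "ExtraFieldInfo": "",
        }
    }
}


def resolve_path(record, dotted_path):
    """Walk a dotted path through nested dicts; return None if any key is missing."""
    current = record
    for key in dotted_path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def apply_transform(record, transform):
    """Build one output row by resolving every path in the transform."""
    return {column: resolve_path(record, path) for column, path in transform.items()}


if __name__ == "__main__":
    print(apply_transform(SAMPLE_EVENT, TRANSFORM))
```

Each key produced by the transform should match a column name in the BigQuery schema, which is why the schema and the transform need to be edited together.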

You are now ready to send Hayabusa events to BigQuery!